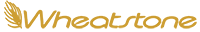

Layers Software Suite. Mixing, Streaming, and FM/HD Processing in an Instance.

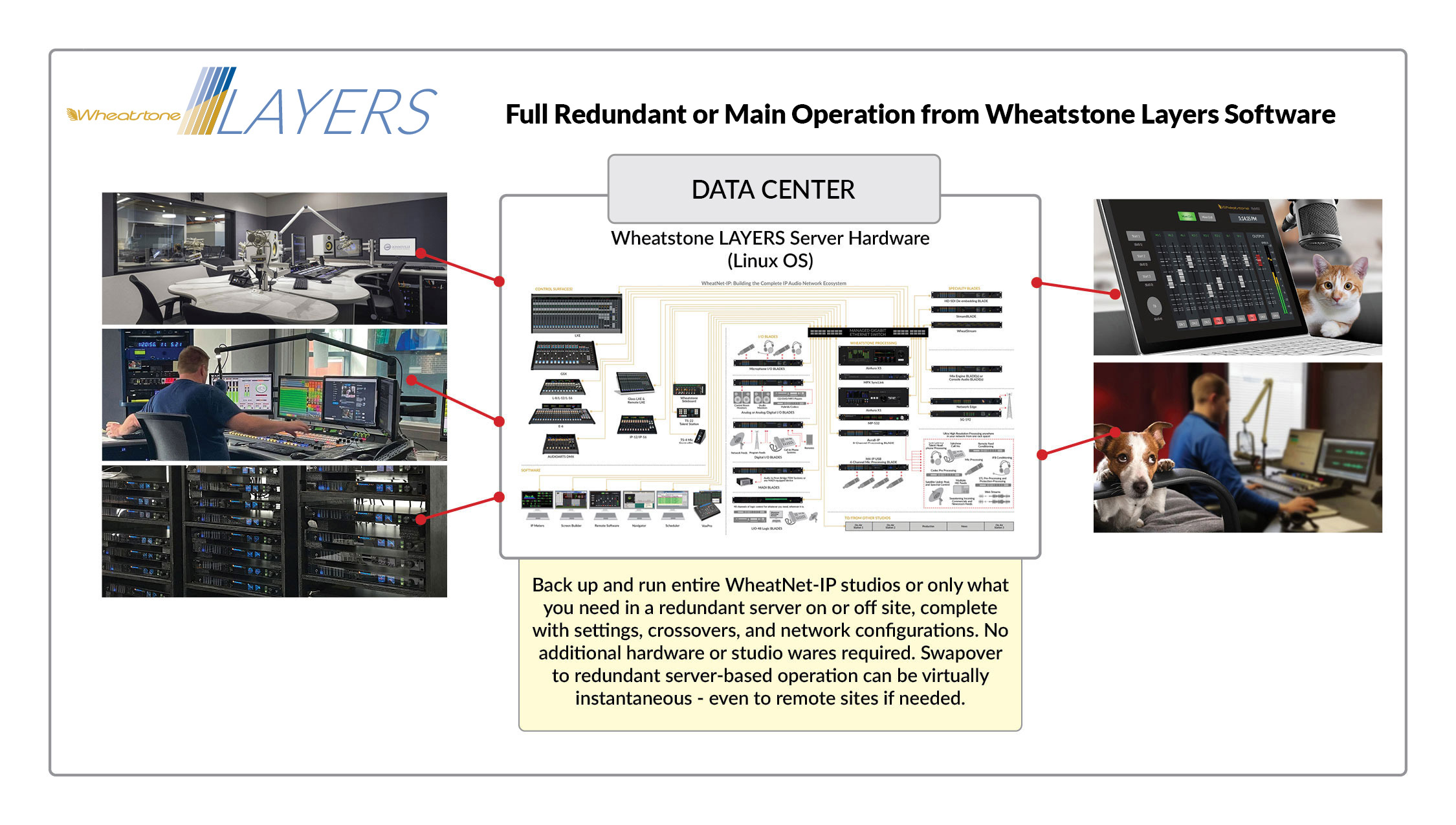

Introducing Layers Software Suite with Wheatstone SystemLink®. Add mixing, FM/HD processing and stream instances to any server in your rack room or off-site at AWS or another cloud data center. Available with the Wheatstone SystemLink® option, an FM MPX over IP transporter with RIST connectivity for robust, secure and reliable transport over any IP link.

Winner of Radio World Best of Show 2024 for our Layers virtualization with MPX over IP transporter, which creates the infinite studio capable of sharing media, workflows and resources across distances.

Layers Software Suite. Layers Mix, Layers FM, and Layers Stream in an Instance

- Spin streaming, FM/HD processing and mixing up or down as needed, on your server or on AWS. Pay only for the cloud services you use.

- Add to any server in your rack room, or off-site at AWS or another cloud data center, as an extension of your WheatNet-IP audio network.

- Extend studio failover redundancy across multiple AWS or Google cloud data centers. No additional hardware infrastructure required.

- Centralize audio processing for all streams and FMs on-premise or offsite at cloud data centers. Finally, the best ears in your organization can set audio processing for each stream and each FM with MPX out to the transmitter.

- Consolidate mixing, processing and stream provisioning for several stations and studios into one server location for shared resources and redundancy.

- Replace racks of processors, PCs and mix engines. One onsite server can run multiple Layers mixing instances for several consoles throughout your facility, plus serve Layers FM audio processing instances as well as provisioning, processing and metadata for multiple streams out to the CDN provider.

- Combine the scalability of Linux and enterprise technology with the deep reliability of WheatNet-IP AoIP, mixing, processing and streaming software designed by a dedicated, broadcast-only company.

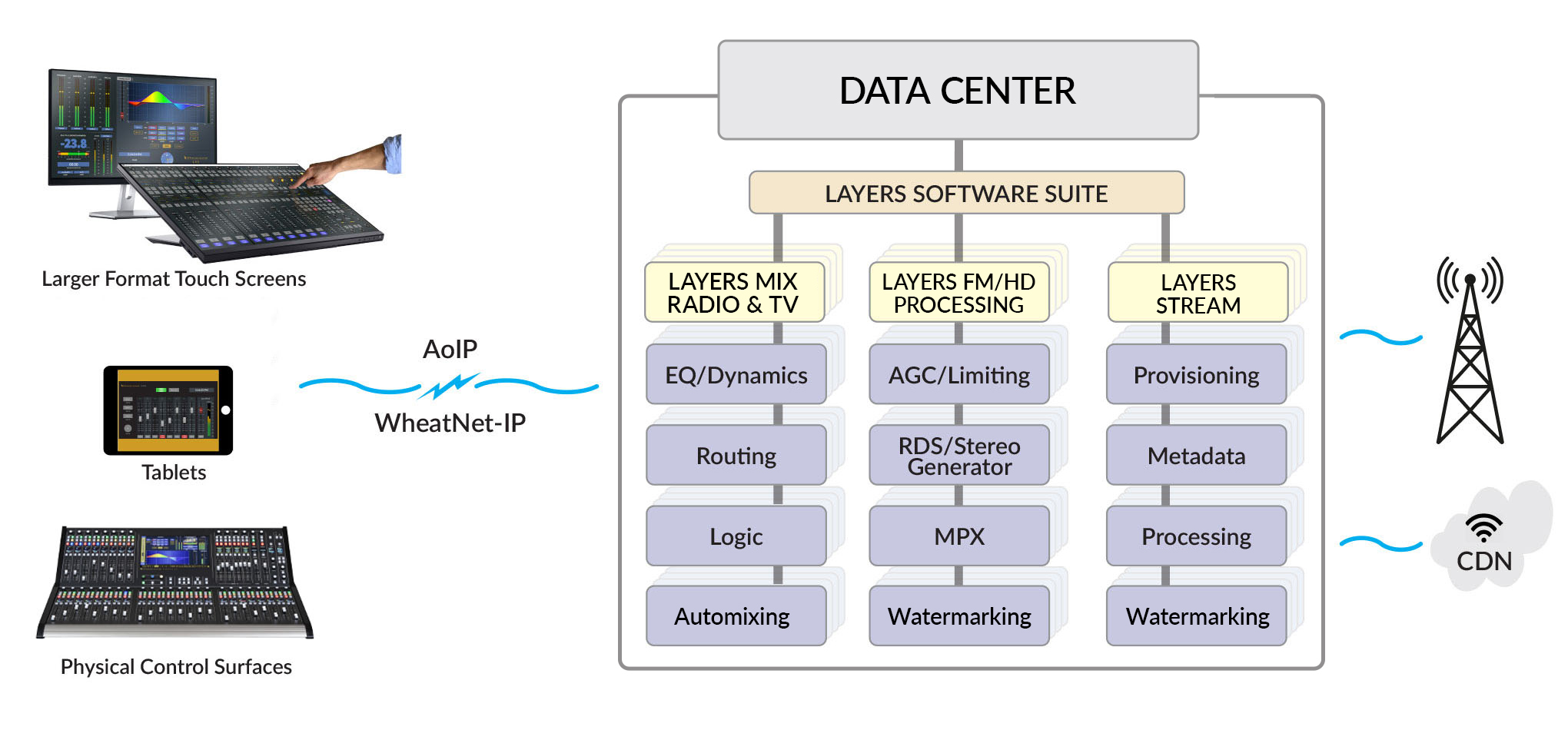

Layers Glass Software

Layers Glass software for the laptop, tablet or other glass surface includes full-function radio and television virtual consoles with familiar buttons, knobs, and multi-touch navigation and menuing. Layers Mix handles all the mixing and processing required by the control surface, virtual or fixed, for radio and television applications.

Layers Mix includes a full-featured mix engine and Glass virtual mixers for the laptop, tablet or touchscreen monitor.

Layers Mix Engine:

- Spin up mixing instances for all your LXE or Strata glass or console surfaces and replace racks of hardware.

- As many as 20 mix engine instances in one on-premise server, saving racks of mix engines and the associated engineering, electrical and real estate space.

- Native IP audio integration with all major production automation systems (TV)

- Native IP audio integration with all major playback automation systems (radio)

- Linux-based OS with touchscreen support accessible via HDMI video output

- Full screen XY controller for routing destination and source feeds

- Signal sources and destinations limited only by the size of the network

- Manage via virtualization software like VMware or container software like Docker, on-premise on your own server or off-site at cloud provider data centers

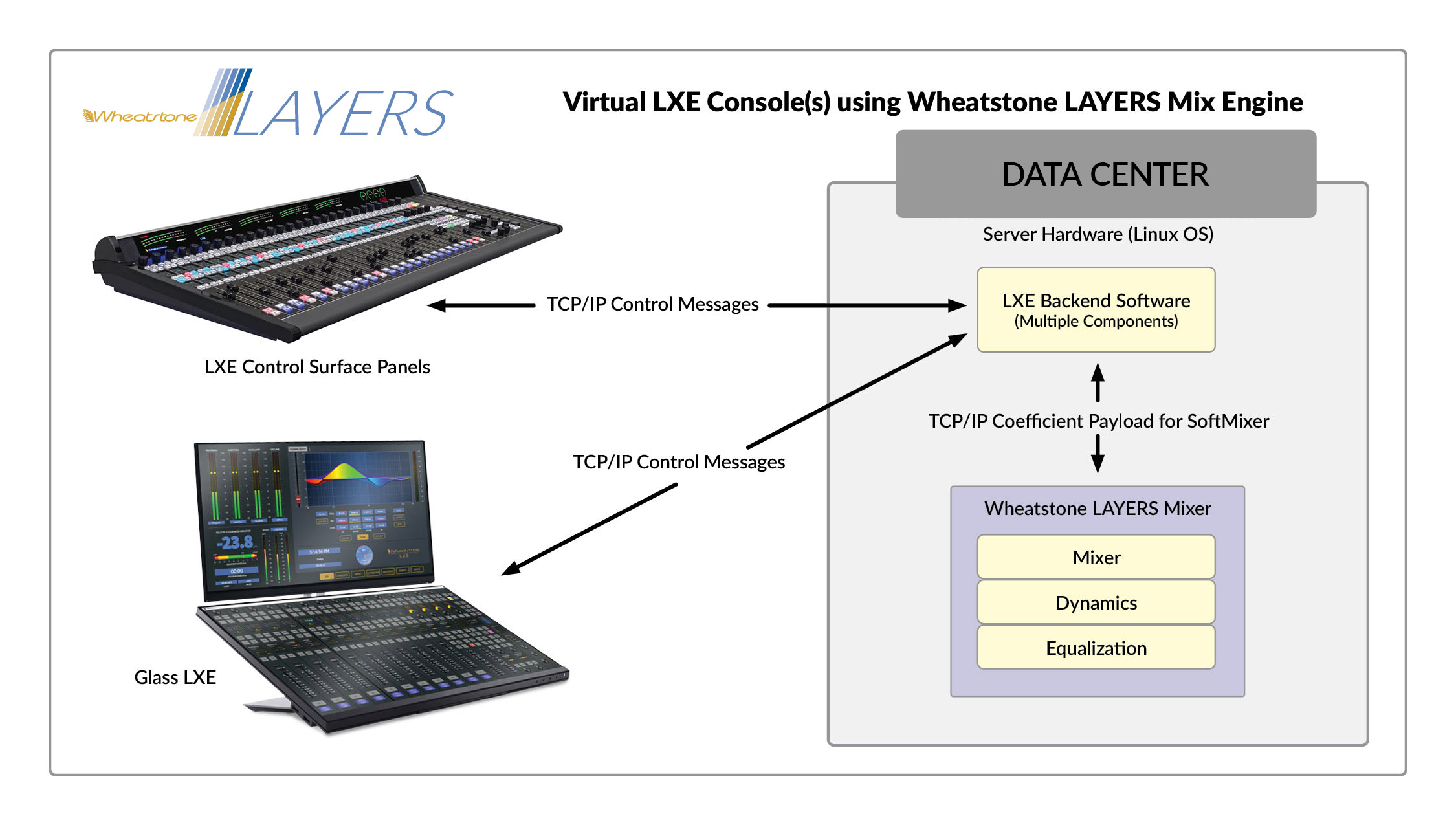

- Works with fixed console surfaces such as LXE and Strata as well as Mix Glass for laptops, tablets and PC monitors

- Manage multiple instances via virtualization software like VMware or container software like Docker, on-premise on your own server or off-site at cloud provider data centers.

Layers Mix Glass for laptops, tablets and PC touchscreens

- Complete virtual console interface with familiar navigation, menuing, fader movement and controls for setting EQ curves, filtering, and other settings

- Predetermined presets ideal for television or radio workflows

- Unlimited subgroups, VCA groups, aux sends and buses, mix-minus, channel and output assigns

- Unlimited cue feeds as required

- Dynamics, including compressor, expander and gate, controlled via touchscreen

- Full parametric EQ controlled via touchscreen

- Loudness and phase correlation metering included

- Unlimited number of events that can be created, edited and recalled as snapshots of the control surface

- Prebuilt screens for metering, clocks, timers, dynamics, EQ assigns

- Easy-to-use scripting tools for customizing screens

- Runs on any Linux OS laptop, tablet or PC client from an on- or off-premise server or third party cloud provider

- Multi-access, multi-touch and synchronized between multiple operators sharing board duties during fast-paced, remote productions

- Real-time fader tracking and live synchronization of buttons and controls for separate operators connected over an IP connection

- All sources accessible in the WheatNet-IP audio network

- Ultimate in smart control with options like Automix for automatically reducing gain on mics near the mic being addressed

- Control devices and trigger events anywhere in the WheatNet-IP audio networked studio.
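The Automix option above is not described in detail here, so as an illustration only, this sketch shows the classic gain-sharing approach used by many automatic mixers: each mic receives a share of the total gain in proportion to its share of the total input power, so the mic being addressed stays near unity while nearby idle mics are pulled down. The function names and the silence threshold are hypothetical; a production automixer would also smooth levels over time and hold the last active mic open.

```python
import math

def gain_sharing_automix(levels):
    """Return linear amplitude gains for each mic using gain sharing.

    levels: short-term RMS level of each mic (any consistent linear unit).
    Each mic gets its share of the total input power, so the squared
    gains always sum to 1.0 (one open mic's worth of total gain).
    """
    if not levels:
        raise ValueError("need at least one mic level")
    powers = [max(level, 0.0) ** 2 for level in levels]
    total = sum(powers)
    if total < 1e-12:
        # Nobody is talking: spread the gain evenly across all mics.
        return [math.sqrt(1.0 / len(levels))] * len(levels)
    return [math.sqrt(p / total) for p in powers]

def to_db(gain):
    """Convert a linear amplitude gain to dB."""
    return 20.0 * math.log10(gain) if gain > 0 else float("-inf")

if __name__ == "__main__":
    # Host talking at full level, two guest mics picking up room spill.
    gains = gain_sharing_automix([1.0, 0.1, 0.1])
    print([round(to_db(g), 1) for g in gains])  # → [-0.1, -20.1, -20.1]
```

Because the squared gains sum to one, the overall level stays constant whether one or several people are talking, which is what keeps room noise and comb filtering from building up as more mics open.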

Layers FM/HD Processing. Centralize processing settings across multiple markets via virtualization software like VMware or container software like Docker, on-premise on your own server or off-site at cloud provider data centers.

Each processing instance includes:

- Multiband AGC utilizes AI for smooth transitions between commercials and programming

- Limitless clipper performs perfect peak control without generating annoying IM distortion

- Specialized bass management tools deliver accurate, articulate bass regardless of program format

- Exclusive Wheatstone Unified Processing means each stage in the processing chain intelligently interacts with the other processing stages to create a dynamic and dominant on-the-dial presence.

- Multipath mitigation for delivering quality programming to more radios

- Full RDS capabilities with data import from popular automation systems

- ITU-R BS.412 MPX power management assures compliance with European standards

- Intelligent stereo enhancement for a consistent stereo sound field experience

- Nielsen audio software encoder included. The PPM watermark is inserted after all multiband processing to deliver a consistent and robust watermark

- Part of the WheatNet-IP audio network, a complete AoIP ecosystem of 200+ interconnected devices and elements


Available with SystemLink® FM MPX over IP transporter with RIST connectivity for robust, secure and reliable transport over any IP link.

Layers Stream features key Wheatstone AoIP, audio processing and codec bandwidth optimization technology.

- Audio processing designed specifically to optimize the performance of audio codecs:

– Five-band intelligent AGC with AI for a smooth transition between commercials and program content. iAGC uses program-derived time constants to establish tonal balance and level consistency rather than aggressive attack and release times that can interfere with codec performance.

– Two-band final limiter is optimized for streaming and avoids the hard limiting and clipping which can interfere with codec performance

– Stereo image management preserves an artistic stereo image while preventing over-enhancement that can skew the codec algorithm

– Bass boost and monaural bass features for optimizing the quality of the bit stream

– A selectable six-band parametric equalizer with peak and shelf function

– Two-stage phase rotator corrects voice asymmetry

– High and low pass filtering can remove noise and hum to optimize codec bit performance

- Selectable AAC, Opus, and MP3 encoders and a wide range of bit rates for reaching a broad range of end user devices and players

- BS.1770-compliant loudness control manages loudness between -24 and -10 LUFS

- Cloud-ready and compatible with standard CDN and streaming platforms. Supports HLS, Icecast, RTMP, and RTP streaming protocols

- Direct a stream from anywhere in the world as a cloud instance that can be brought up in a second and torn down in a second. Pay per use.

- Lua transformation filters convert metadata input from any automation system into any required output format for transmission to the CDN server

- Nielsen audio software encoder included. The PPM watermark is inserted after the dual-band limiter of each stream for a robust, consistent signal that can be picked up by the PPM without interfering with the performance of the audio codec

- Part of the WheatNet-IP audio network, a complete AoIP ecosystem of 200+ interconnected devices and elements

- Wheatstone’s streaming appliances and Layers cloud streaming software are now available with Triton Digital’s streaming protocol
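Layers Stream performs metadata transformation with Lua filters. To keep every example on this page in one language, the sketch below shows the same idea in Python: parse a now-playing line from an automation system and render it in the Icecast/SHOUTcast `StreamTitle='...';` style. The `KEY=value|KEY=value` input format, the field names, and the station name are hypothetical stand-ins, not any particular automation system's output or the actual Layers filter API.

```python
def parse_automation_line(line: str) -> dict[str, str]:
    """Parse a hypothetical 'KEY=value|KEY=value' now-playing line."""
    fields: dict[str, str] = {}
    for part in line.strip().split("|"):
        key, sep, value = part.partition("=")
        if sep:  # ignore fragments without '='
            fields[key.strip().upper()] = value.strip()
    return fields

def to_icy_stream_title(fields: dict[str, str], station: str) -> str:
    """Render the fields as an Icecast/SHOUTcast-style StreamTitle string."""
    if fields.get("CATEGORY", "MUSIC").upper() != "MUSIC":
        # Spots, liners and sweepers: show the station name instead.
        text = station
    else:
        parts = [fields.get("ARTIST", ""), fields.get("TITLE", "")]
        text = " - ".join(p for p in parts if p) or station
    return f"StreamTitle='{text}';"

if __name__ == "__main__":
    song = parse_automation_line("ARTIST=The Band|TITLE=Night Drive|CATEGORY=MUSIC")
    spot = parse_automation_line("TITLE=Main Street Motors|CATEGORY=SPOT")
    print(to_icy_stream_title(song, "WXYZ"))  # → StreamTitle='The Band - Night Drive';
    print(to_icy_stream_title(spot, "WXYZ"))  # → StreamTitle='WXYZ';
```

Keeping parsing and rendering as separate steps means one parser per automation system can feed any number of output formats for different CDN targets.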

Servers as Gateway to the Cloud

By Dominic Giambo, Engineering Manager

Whatever your future facility might look like, it most certainly will involve software and a server or two. In his presentation at the 2022 NAB Broadcast Engineering and Information Technology (BEIT) Conference, Wheatstone Engineering Manager Dominic Giambo talked about servers as the gateway to the cloud.

By moving broadcast functions from application-specific hardware onto a general-purpose server, you can replace racks of hardware and readily serve up any of those functions to any point in the AoIP network. In the case of our Layers software suite, for example, one Dell or HP server can run multiple mixing instances for several consoles located throughout the studio facility, plus serve FM/HD audio processing with full MPX out to the transmitter as well as provisioning and metadata for multiple streams out to the CDN provider.

All these resources and apps can reside on one server run by one Linux OS. By having another server at the ready, you also have the ultimate in redundancy. Using built-in failover features available in any modern AoIP system, you can seamlessly switch to a redundant server in the event of a failure. Eventually, as cloud technology becomes more prevalent and interconnect links become more reliable, you can move all or some of these applications or resources offsite to be managed by a cloud provider.
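The failover logic in an AoIP system is built in and not documented here, but the underlying idea can be sketched as a heartbeat check: each server reports in periodically, and if the active server goes quiet for longer than a timeout while the standby is still healthy, traffic moves to the standby. The class, server names, and one-second timeout below are hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class FailoverSelector:
    """Choose the active server from heartbeat timestamps (hypothetical sketch)."""
    primary: str
    backup: str
    timeout_s: float = 1.0
    last_seen: dict = field(default_factory=dict)
    active: str = ""

    def __post_init__(self) -> None:
        self.active = self.primary  # start on the primary server

    def heartbeat(self, server: str, now: float) -> None:
        """Record that a server reported healthy at time `now` (seconds)."""
        self.last_seen[server] = now

    def _alive(self, server: str, now: float) -> bool:
        seen = self.last_seen.get(server)
        return seen is not None and now - seen <= self.timeout_s

    def evaluate(self, now: float) -> str:
        """Return the server that should carry traffic at time `now`."""
        if self._alive(self.active, now):
            return self.active
        other = self.backup if self.active == self.primary else self.primary
        if self._alive(other, now):
            self.active = other  # switch only if the other side is healthy
        return self.active

if __name__ == "__main__":
    sel = FailoverSelector("layers-a", "layers-b")
    sel.heartbeat("layers-a", 0.0)
    sel.heartbeat("layers-b", 0.0)
    print(sel.evaluate(0.5))  # → layers-a
    sel.heartbeat("layers-b", 1.5)
    print(sel.evaluate(2.0))  # → layers-b
```

This sketch deliberately does not fail back automatically when the primary returns, which avoids flapping between servers during an intermittent fault; an operator can move traffic back deliberately.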

At any time, you can add virtualization to host the software locally on your server or stack of servers. This could allow you to use part of the same server for automation without a change to the architecture. A nice feature of virtualization software like VMware is that it decouples the software even more from the hardware; just copy the virtual machine and run it, along with your applications, on any similar server or desktop. Another alternative is to go to a container model using something like Docker, which runs a number of services on a single container engine. In this model, you don’t have the overhead of a full OS running each and every instance, as is the case with VM host software; it’s just one container engine running the containers for the services you need.

Whatever direction you decide to go with your broadcast facility, it all starts with a server and the right software.

How Future Cloud is Changing Studio Buildouts Now – Q&A with Dominic Giambo

Q: Why a server-first approach?

DG: This gives us the beginnings of virtualization in the sense that you can offload some of the functions performed on specialized hardware to instances of software on enterprise servers. There is really no need to invest in a big architecture migration plan. Just about every modern station has or will eventually have servers, which can be used to run a virtual mixer or instances of audio processing feeding the transmitter site or a stream. The work is simply moved onto a server CPU instead of being performed on a dedicated hardware unit, and as commodity hardware, servers tend to get more powerful faster than a dedicated piece of hardware that might be updated less frequently.

This approach buys you greater flexibility for adding studios, sharing resources between regional locations, and even riding out supply chain interruptions that can affect hardware-only systems. We can use enterprise servers to do what we might have done with specialized hardware in the past, and that is only going to get more flexible and affordable as time goes on.

Q: Why now?

DG: The benefits can be significant, from not having to maintain specialized hardware and all the costs associated with it (electrical, AC, and space), plus there’s a certain adaptability with software that you just can’t get with hardware alone. The next step might be to go to a container model with the use of a containerization platform. Containerization doesn’t have the large overhead that you find with virtualization per se, because you can run a number of different containers that share the same operating system. For example, one container could host WheatNet-IP audio processing tools, while another could host the station automation system, each totally isolated yet running off the same OS kernel. Should you decide to move onto a public cloud provider like Amazon or Microsoft, these containers can then be moved to that platform. Containers work well on just about all the cloud providers and instance types, and most providers even offer tools to make it easy to manage and coordinate your containers running on their cloud. Software as a Service applications that consolidate functions and don’t have critical live broadcast timing requirements are already replacing rows of desktop computers and racks of processing boxes. Streaming and processing are good examples. Migrating these to a cloud environment is straightforward if you already have them running on your servers. The hard work is already done, plus you’ll still have the servers for redundancy or for testing and trying new ideas.

Q: What about mixing in a cloud?

DG: Playout systems are moving to the cloud, and that makes a good case for the mix engine to also move to the cloud. Latency is always going to be an issue with programming going into and out of a server (or cloud) if it’s far away, but there are effective ways to deal with this. For example, I can see how a local talk show might still be mixed locally to preserve the low latency needed for mic feeds into that mix, but it might be mixed using client software based in the cloud or on a regional server for general distribution.

But we need to keep in mind that while some industries can be more tolerant of service interruptions, live broadcast is not one of them. We need to take this in steps. We may be able to get many of the benefits of cloud by moving towards a software-based approach first, such as running this software on servers with ever increasing power and then distributing the control of that equipment remotely using virtual consoles. In a later step these same servers could be re-tasked and used in redundant backup scenarios alongside cloud resources to mitigate the security risks of a cloud-based approach.
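Why distance matters for live mic mixing can be shown with back-of-the-envelope arithmetic. Light in optical fiber travels roughly 200 km per millisecond (about 5 µs per km). The sketch below assumes fiber routes run about 1.5 times the straight-line distance and adds a fixed 10 ms for buffering at both ends; both figures are illustrative assumptions, not measurements of any Wheatstone or cloud system.

```python
# Rule of thumb: light in optical fiber covers about 200 km per millisecond,
# i.e. roughly 5 microseconds per kilometer.
FIBER_US_PER_KM = 5.0

def one_way_ms(distance_km: float, route_factor: float = 1.5) -> float:
    """Propagation delay for one network leg, in milliseconds.

    route_factor (an assumption) accounts for fiber paths being longer
    than the straight-line distance between the two sites.
    """
    return distance_km * route_factor * FIBER_US_PER_KM / 1000.0

def talkback_ms(distance_km: float, route_factor: float = 1.5,
                buffer_ms: float = 10.0) -> float:
    """Mic -> remote mix engine -> headphones: two legs plus total buffering."""
    return 2 * one_way_ms(distance_km, route_factor) + buffer_ms

if __name__ == "__main__":
    for km in (50, 500, 2000):
        print(f"{km:>5} km: {talkback_ms(km):.1f} ms mic-to-headphone")
```

Under these assumptions a mix engine 1,000 km away adds 15 ms of round-trip propagation on top of buffering, which is why keeping the mic mix regional or local, as described above, matters for talent monitoring their own voice.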

Q: What else can you tell us?

DG: For most broadcasters, virtualization or cloud isn’t the goal any more than adding a new codec or AoIP system is the goal. The great thing about software virtualization is that it uses enterprise commodities, which will inevitably find their way into studios and rack rooms. In that sense, the planning has already been done, so now it’s just a matter of taking advantage of what you can do with what is available.

Running on Servers, Move Layers to Cloud

Nothing prepares you for a studio buildout like shopping for an automobile. Entirely different scene, same familiar issues. Too many choices? Check. Not enough inventory? Check. Disrupted supply chains. Labor shortages. Your future and everything else riding on it. Check. Check. And check. Let’s also not forget about cloud or virtualization, the electric car of studios that has immediate and future implications.

Certainly, all the above make a good case for moving studios over to a cloud, even if that “cloud” is a server in your own facility. It just so happens that key broadcast functions now live in software and are easily ported from broadcast-specific hardware to commodity servers, as is the case with our Layers software suite. The mix engines for our WheatNet-IP audio console surfaces, for example, are built on Linux, which runs on the embedded chips in our mixing Blades just as readily as on a Dell or Hewlett Packard server. One server can run multiple Layers mixing instances for several consoles throughout your facility, plus serve Layers FM audio processing instances with full MPX out to the transmitter, as well as provisioning and metadata for multiple streams out to the CDN provider.

We can now do all that from a single server, but does that mean you should jump on the software-in-a-server bandwagon today? Not necessarily. We can think of four good reasons why you might consider adding a server with Layers mixing, processing and streaming to a WheatNet-IP networked studio in place of the equivalent in hardware.

- 1. You’re running out of space. One server running Layers can serve mixing instances for all the LXE and GSX consoles throughout a facility, saving the cost and space of each mixing engine that would otherwise sit in racks. Not having a mix engine in every single studio, or a rackful of them drawing electricity and heating up the room, reduces the cost of cooling and electrical, and it might even free up some room for another work area, like a voiceover booth.

- 2. You’re running out of time. Adding a new studio or consolidating stations can be done much faster in software than by adding hardware. Instances can be added to the server as needed for backend mixing, FM processing and stream provisioning. Plus, you can mix and control from a laptop, tablet or other glass or physical control surface, another time and money saver.

- 3. You need redundancy or expandability now. If you wanted to build in more redundancy, traditionally you’d budget for a spare mix engine for a few control rooms and/or a backup audio processor or streaming appliance. One server with Layers can now give you redundant mix engines for any control room that needs an emergency backup. That same server also can add an FM/HD audio processing chain as a backup to the main or add streams as you need them, such as for a special holiday program channel or ad promo. In fact, you can back up an entire WheatNet-IP studio facility with all the AoIP routing crossovers and configuration settings in one server, onsite or off. In some cases, there’s a financing advantage for a software backup system compared to a fixed studio (ask our sales engineers for details).

- 4. You need to be cloud ready. Maybe you’re thinking about a third-party cloud provider for the future or maybe onsite cloud is on management’s requirement list. This is an ideal starting point because you can host mixing, processing and streaming locally on your server or stack of servers now and, if or when the time comes, you can move all or part of Layers software offsite.

Still not sure? You’ll be glad to know that adding a server with Layers software suite is an option you can add to your WheatNet-IP audio networked studio now or down the road.

What is RIST and Why You Need It

It seems that network protocols are a lot like potato chips. You can’t have just one. Here’s what you need to know about the latest transport protocol and why everyone is talking about RIST for live streaming and moving media across the public internet.

If you’ve been following protocols for a while, you know that UDP is a simple message-oriented protocol, and UDP multicasting is used to transport audio and control data at very low latency. We can reduce WheatNet-IP audio packet timing to ¼ ms for minimum latency in part because of UDP multicasting, which means that local studio controls and audio transport are almost instantaneous.
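A ¼ ms packet time is easier to appreciate in samples. This minimal sketch assumes 48 kHz, stereo, 24-bit linear PCM; those parameters are assumptions for illustration, not a statement of the WheatNet-IP packet format. It uses exact fractions so a packet time that does not hold a whole number of samples is rejected rather than silently rounded.

```python
from fractions import Fraction

def packet_stats(sample_rate_hz=48_000, packet_ms=Fraction(1, 4),
                 channels=2, bits=24):
    """Return (samples per channel, payload bytes, packets per second)."""
    packet = Fraction(str(packet_ms))  # exact: accepts 0.25, "1/4", Fraction
    samples = sample_rate_hz * packet / 1000
    if samples.denominator != 1:
        raise ValueError("packet time must hold a whole number of samples")
    samples = int(samples)
    payload_bytes = samples * channels * bits // 8
    packets_per_s = int(1000 / packet)
    return samples, payload_bytes, packets_per_s

if __name__ == "__main__":
    print(packet_stats())             # → (12, 72, 4000)
    print(packet_stats(packet_ms=5))  # → (240, 1440, 200)
```

Under these assumptions, each ¼ ms packet carries only 12 samples per channel, so the network delivers 4,000 small packets per second. That trade of packet rate for latency is practical on a managed studio LAN but is not how you would normally cross the public internet.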

But what happens when we go beyond the studio network and want to live-stream audio and control data in real-time across the public network, where links are less reliable and distance adds more delay?

Enter RIST, or Reliable Internet Stream Transport, which adds error correction and packet recovery to UDP-based media transport. RIST uses RTP sequence numbers to identify lost packets and can use multi-link bonding to help ensure media delivery over public links with very little delay, often without having to compress or reduce the bits, and hence the quality, of the audio being transported. Based on established protocols widely adopted by the broadcast industry, including RTP and SMPTE 2022, RIST is interoperable with broadcast-grade equipment, including Wheatstone products and those of its partners. It is a relatively new arrival in the media transport world, and it’s especially timely given the growing number of high-speed links that now make it possible to stream at full audio bandwidth.

Or, as our senior streaming software engineer Rick Bidlack explained, “We have all this available bandwidth on public networks for getting the full audio bandwidth up to the cloud or wherever we want it. RIST just makes sure that it arrives there.”
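The loss-detection step that RIST builds on can be sketched in a few lines. RTP sequence numbers are 16 bits and wrap around, so a receiver compares them modulo 65,536; any gap between the highest sequence number seen and a newly arrived packet marks candidates for a retransmission request (RIST receivers report these back to the sender as NACKs). This is a simplified illustration under those assumptions: a real receiver also waits out a reorder window, times retransmission requests, and may use FEC, none of which is modeled here. The class name is hypothetical.

```python
SEQ_MOD = 1 << 16  # RTP sequence numbers are 16 bits and wrap around

def seq_delta(a: int, b: int) -> int:
    """Signed distance from sequence number a to b, handling wraparound."""
    d = (b - a) % SEQ_MOD
    return d - SEQ_MOD if d >= SEQ_MOD // 2 else d

class LossDetector:
    """Track received RTP sequence numbers and report gaps (sketch)."""

    def __init__(self) -> None:
        self.highest = None        # highest sequence number seen so far
        self.missing: set = set()  # gaps not yet filled by a late/resent packet

    def receive(self, seq: int) -> list[int]:
        """Record a packet; return sequence numbers newly detected as lost."""
        if self.highest is None:
            self.highest = seq
            return []
        d = seq_delta(self.highest, seq)
        if d <= 0:
            # Late, retransmitted, or duplicate packet: it may fill a gap.
            self.missing.discard(seq)
            return []
        lost = [(self.highest + i) % SEQ_MOD for i in range(1, d)]
        self.missing.update(lost)
        self.highest = seq
        return lost

if __name__ == "__main__":
    det = LossDetector()
    for seq in (65534, 65535, 2):
        print(seq, det.receive(seq))  # last line: 2 [0, 1]
    det.receive(0)                    # retransmission arrives
    print(sorted(det.missing))        # → [1]
```

Detecting loss by sequence number instead of by timeout lets the receiver ask for a missing packet as soon as the next one arrives, which is how retransmission can fit inside a small latency budget.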

RIST is now part of our I/O Blade 4s, our Wheatstream and Streamblade streaming appliances, and our Layers Stream streaming software, giving WheatNet-IP audio studios both the speed and reliability needed to take advantage of high-speed public broadband networks for live streaming, news feeds or sports contributions across distances. You can now stream audio from your WheatNet-IP audio studio over a public high-speed link to a regional server or cloud data center at full audio bandwidth and 24-bit resolution without noticeable latency, depending on distance.
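To put "full audio bandwidth and 24-bit resolution" into numbers, the sketch below computes the bit rate of uncompressed stereo PCM plus per-packet header overhead. It assumes 48 kHz stereo and a 40-byte IPv4 + UDP + RTP header (20 + 8 + 12 bytes) per packet; any additional RIST overhead, such as retransmitted packets or tunneling headers, is not modeled, so treat the results as a lower bound.

```python
def pcm_bitrate_bps(sample_rate_hz=48_000, bits=24, channels=2):
    """Raw bit rate of uncompressed linear PCM, in bits per second."""
    return sample_rate_hz * bits * channels

def wire_bitrate_bps(packet_ms, sample_rate_hz=48_000, bits=24,
                     channels=2, header_bytes=40):
    """PCM bit rate plus per-packet IPv4/UDP/RTP header overhead (assumed 40 B)."""
    packets_per_s = 1000.0 / packet_ms
    overhead_bps = packets_per_s * header_bytes * 8
    return pcm_bitrate_bps(sample_rate_hz, bits, channels) + overhead_bps

if __name__ == "__main__":
    print(f"PCM payload: {pcm_bitrate_bps() / 1e6:.3f} Mbit/s")
    for ms in (0.25, 1, 5):
        print(f"{ms:>5} ms packets: {wire_bitrate_bps(ms) / 1e6:.3f} Mbit/s on the wire")
```

About 2.3 Mbit/s of payload is modest for today's broadband uplinks. Note that shorter packets mainly cost header overhead, so links that cross the public internet typically use longer packet times than a local studio network does.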

Opening up a RIST stream session from your Wheatstone AoIP streaming appliance or software creates a dedicated channel between a local IP address and an IP address on the far end and establishes a low-latency, high-quality connection between the two points. And because RIST interoperates with RTP/UDP at the transport layer, it integrates easily into WheatNet-IP audio networked studios. For example, our Layers Stream software running in a data center can receive a stream via RIST as well as send a stream via RIST back to a Blade 4 in your studio to be routed, processed, and controlled locally through the WheatNet-IP audio network.

RIST is fast becoming an important protocol for the next evolution in AoIP.

Cloud Control Just Got a Lot More 'Handy'

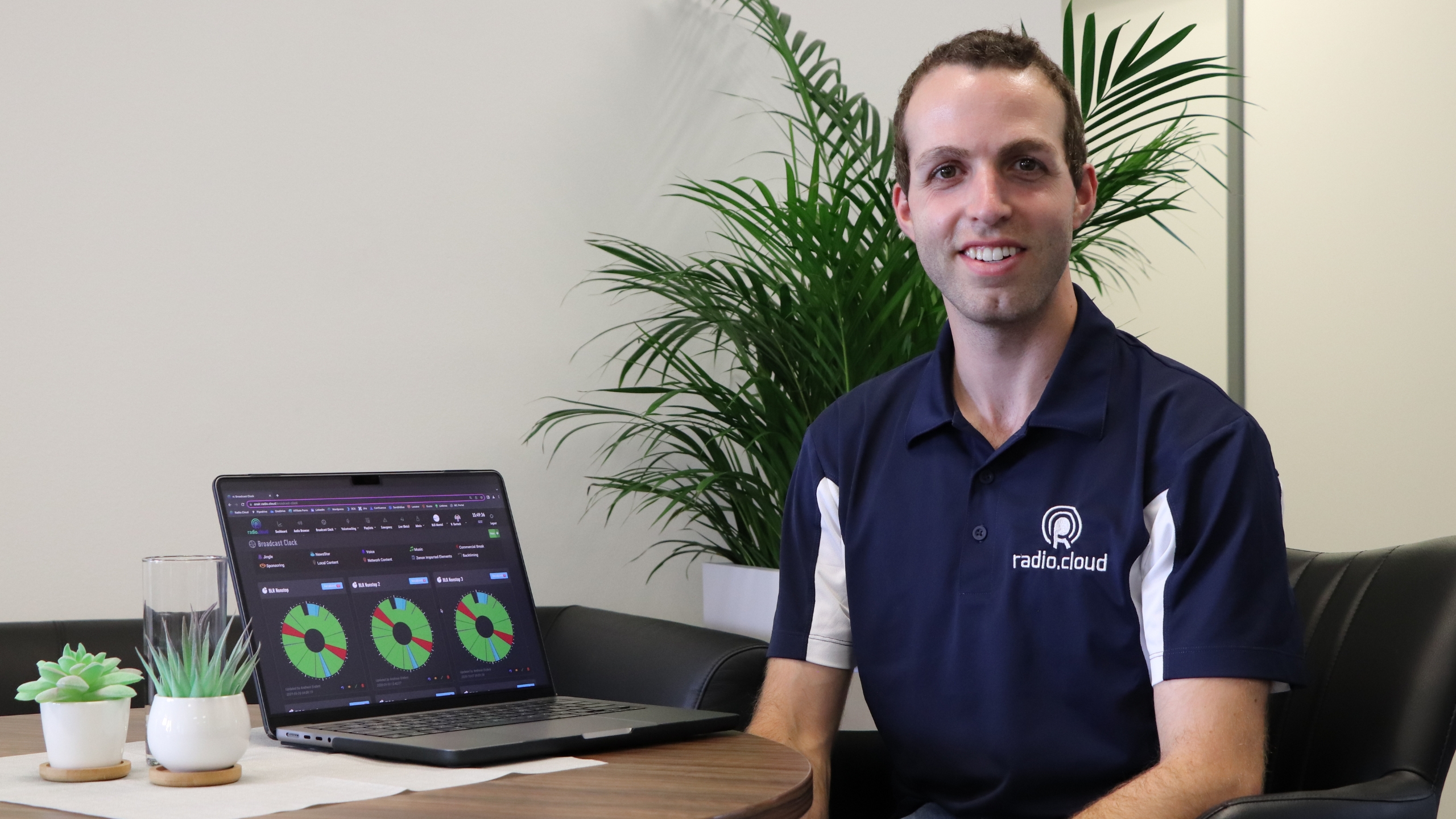

Radio.Cloud and Wheatstone partnership explained.

Finally, something new in the cloud world that you can put your hands on.

We’re referring to our LXE console surface, of course. Now that LXE fixed and Glass LXE surfaces can talk directly to Radio.Cloud’s Live Studio cloud software, we’re getting a lot more handy with cloud control.

All of which is to say you can now produce live shows entirely in the cloud from the LXE in your studio or from a Glass LXE anywhere in the world.

Radio.Cloud’s Andrew Scaglione sat down with our Dee McVicker to explain this hybrid cloud model and why Radio.Cloud, the only cloud-native automation system certified by Amazon Web Services, chose Wheatstone as a technology partner.

DM: First, explain Radio.Cloud’s cloud-native approach to program automation. I understand this is an all-cloud automation system, from the ground up so to speak.


AS: We are entirely cloud-native and can therefore take advantage of the microservices that AWS has developed. I believe we are the first and only radio playout system that is AWS certified, a lengthy process that means we are a full Amazon Web Services partner. We can take advantage of these very highly developed microservices, like S3 synchronized storage among several data centers and Lambda serverless requests, so there’s a lot less overhead processing data. What would normally take 10 to 15 seconds or even a full minute to process takes us a few milliseconds. That’s only possible because we took the cloud-native approach.

DM: Wait, do you mean that you can incorporate some of those AWS-developed services in radio automation?

AS: Right. For example, there’s the Amazon Transcribe service, which can transcribe all your audio as it’s happening. Transcription is especially cool because you can now search on text and then automatically broadcast content related to that text. You can avoid things like the network jock getting on and saying what a beautiful day it is, followed by a local weather report predicting a huge storm. You’re able to look for tag insertion points and, with machine learning, automatically put in content relevant to that insertion point.

DM: So, you’re able to localize better?

AS: Not just localize, but hyper-localize because we can do so many more time-sensitive things. Like, a network might have two elements: a national and local element that are woven together in real-time by the cloud, one being a voice track that every affiliate gets and one being the local element that is different by affiliate. We are able to do this seamlessly and automatically, plus they can mix as much local and network into one clock cycle as they want, whether it’s 10 affiliates or 100 affiliates. You really can’t do that with the processing in a server.

You could probably do all that on a local server, but it wouldn’t be as fast or as good or be as seamless.

DM: Let’s talk about the live production end of things. Radio.Cloud started with automation in 2018 and recently expanded to Live Studio for live production, which is how Wheatstone got involved. (Wheatstone announced a partnership with Radio.Cloud, resulting in Live Studio integration into Wheatstone AoIP consoles through our ACI protocol.)

AS: One of the reasons for partnering with Wheatstone is so we can bring the cloud down to the broadcaster, to make it easier to interface to the cloud. That was important when we introduced Live Studio because we needed the kind of control that only a console could provide, and by extension the AoIP. The idea was, if you can do it through a network, you can do it through a cloud.

Integrating Wheatstone’s native AoIP with a cloud-native system like ours makes it so much easier for someone who wants to do a show live from the studio and maybe have a collaborator log in from their browser. Now they can pull up Radio.Cloud on a Glass LXE screen from wherever and control it with the Wheatstone board at the studio. The idea is that if you’re on the go, you can absolutely control it over a browser and can control our system over a touchscreen. But if you are in your home studio or at the station, you now have the ability to control our automation or Live Studio system with your console. You guys are thinking along the same lines as us; you’re also doing a hybrid model of virtual and traditional with virtual screens and fixed consoles, and we both seemed to be of the same mindset and it’s made a good partnership as a result.

This clip shows Radio.Cloud's Live Studio (on the right) interfacing with Wheatstone's Glass LXE virtual console.

DM: Great minds think alike! Before I move on from live production, I have to ask about latency. What can you tell us about delay as it relates to live mixing in a cloud scenario?

AS: Low latency is another advantage of being cloud-native. We can get that delay down to milliseconds and if we were to control a work surface console in your environment from our environment, you would actually see it happen in what you would perceive to be real time. We also are able to do a mix-minus of your voice so you don’t hear that in your headphone.

DM: That’s amazing. I can see why this is going to be a game changer for so many broadcasters. Thanks, Andrew.


Connect with your Wheatstone sales engineer and go from concept to future ready!

Call +1 (252) 638-7000 or email Wheatstone sales.

Manufactured, shipped, and supported 24/7 from North Carolina, USA